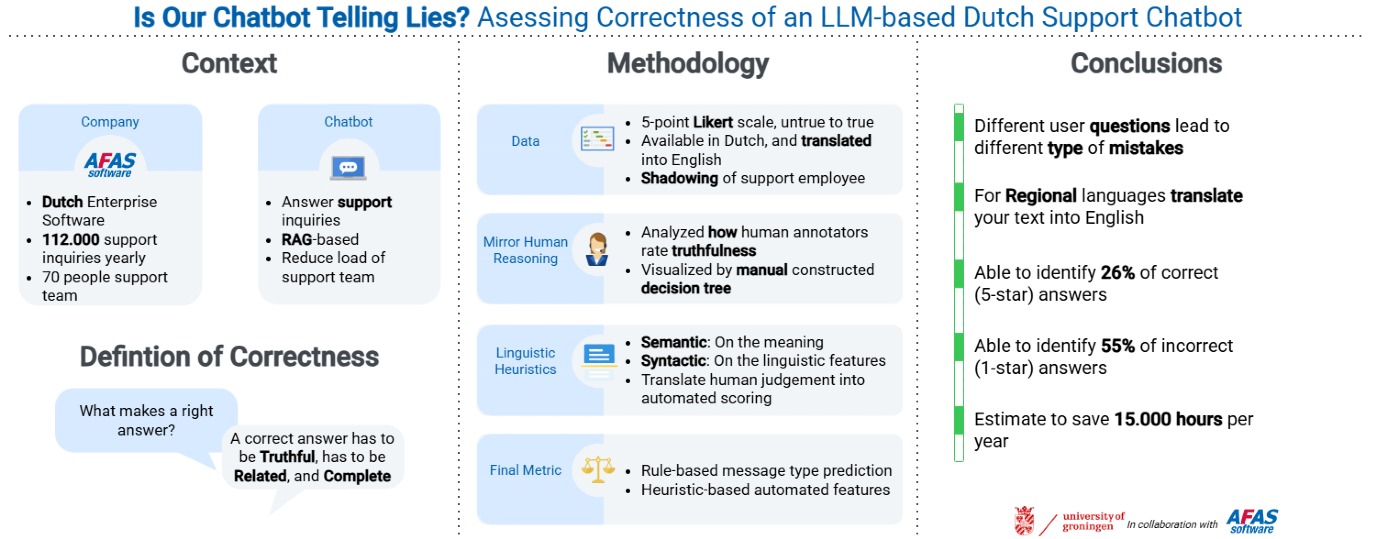

New framework verifies AI-generated chatbot answers

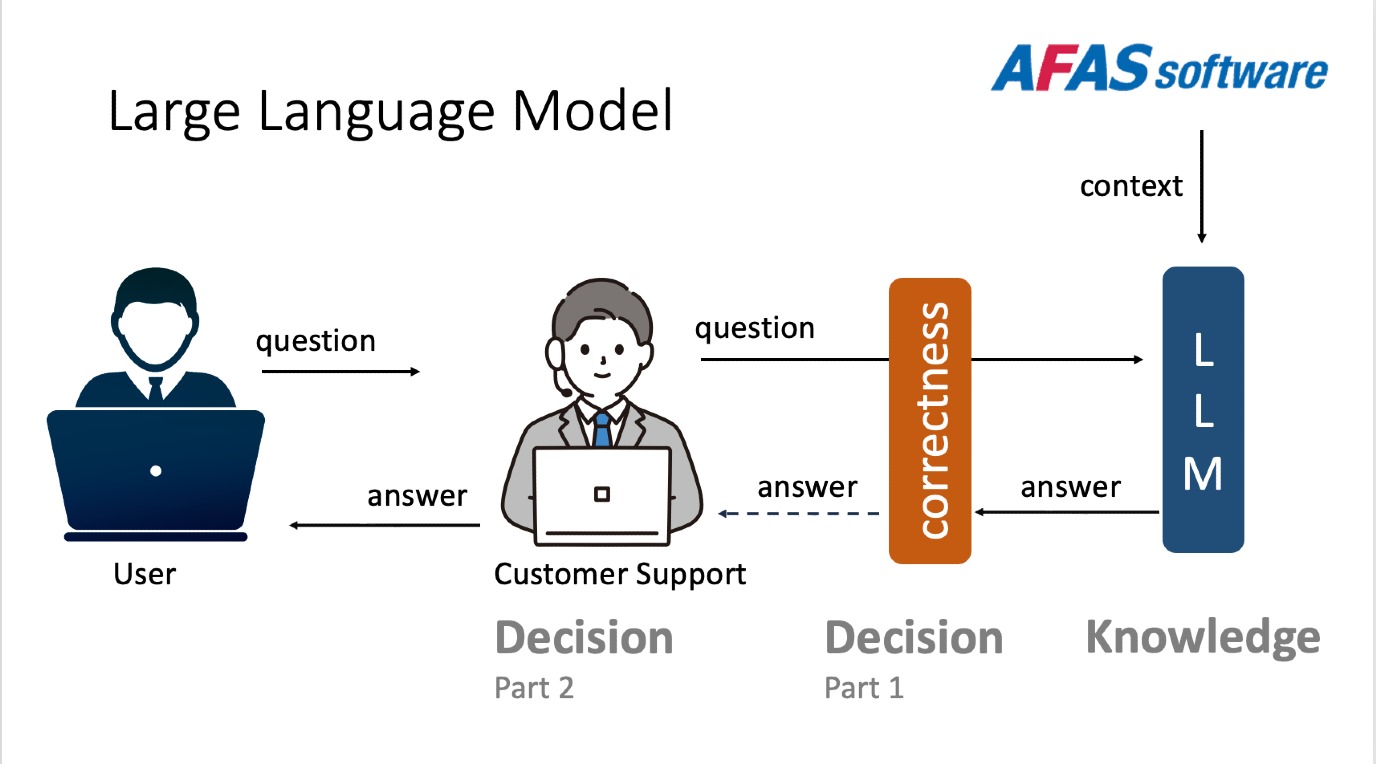

How do you know whether a chatbot is giving the correct answer? This is an important question for companies that use large language models to communicate with their customers. The Dutch company AFAS was using chatbots to generate answers, but each answer had to be checked by a human employee before it was sent to the customer. To speed up this process, AFAS collaborated with a research team from the University of Groningen to develop a framework that verifies AI-generated answers. The system mirrors how human experts currently assess correctness and uses internal documentation as its knowledge base.

FSE Science Newsroom | René Fransen

In practice, incorrect answers are often easier to spot than correct ones, so a framework that quickly filters out clear mistakes can significantly speed up human evaluation. The more interesting scientific challenge, however, lies elsewhere: an answer that appears correct may still miss crucial nuances, revealing a gap between apparent correctness and genuine correctness.

Saving up to 15,000 working hours

The researchers spent a day with the AFAS support staff, observing their work and talking with them to understand how they decide whether a response is correct. They then combined these insights with domain-specific knowledge taken from internal documentation. Initial results show strong potential for this approach: for straightforward yes/no and instruction-type queries, it could save up to 15,000 working hours per year.

The AI system is able to judge correctness even in situations it was not explicitly trained for. This points to a broader scientific opportunity: building AI evaluators that can generalize their judgement across new tasks by mimicking how human experts reason, rather than relying solely on pattern matching.

Furthermore, the results highlight the importance of contextual, organization-specific knowledge, which ultimately determines whether an AI model’s decision is accurate and useful. Author Ayushi Rastogi: ‘For industry, this means that investing in well-structured internal documentation and domain expertise is just as important as deploying advanced AI models. Without that foundation, even the smartest AI model cannot deliver trustworthy, actionable outcomes.’

Reference: Herman Lassche, Michiel Overeem, Ayushi Rastogi: Is our chatbot telling lies? Assessing correctness of an LLM-based Dutch support chatbot. Journal of Systems and Software, online 2 December 2025.