How is neuromorphic touch transforming robotics?

What babies, robots, and brain-inspired computing have in common

Did you know that in humans, touch is the first sense to develop? As early as seven weeks into pregnancy, a baby begins exploring its surroundings through tactile sensations. Touch isn’t just essential for human development — it’s also crucial in robotics.

Robots rely on touch for much more than gripping objects. It plays a role in delicate manipulation, safe navigation, and even in building friendly and trustworthy relationships with humans. And yet, most traditional artificial intelligence systems have focused on vision and hearing. So why has touch been overlooked? And how is neuromorphic computing helping to change that?

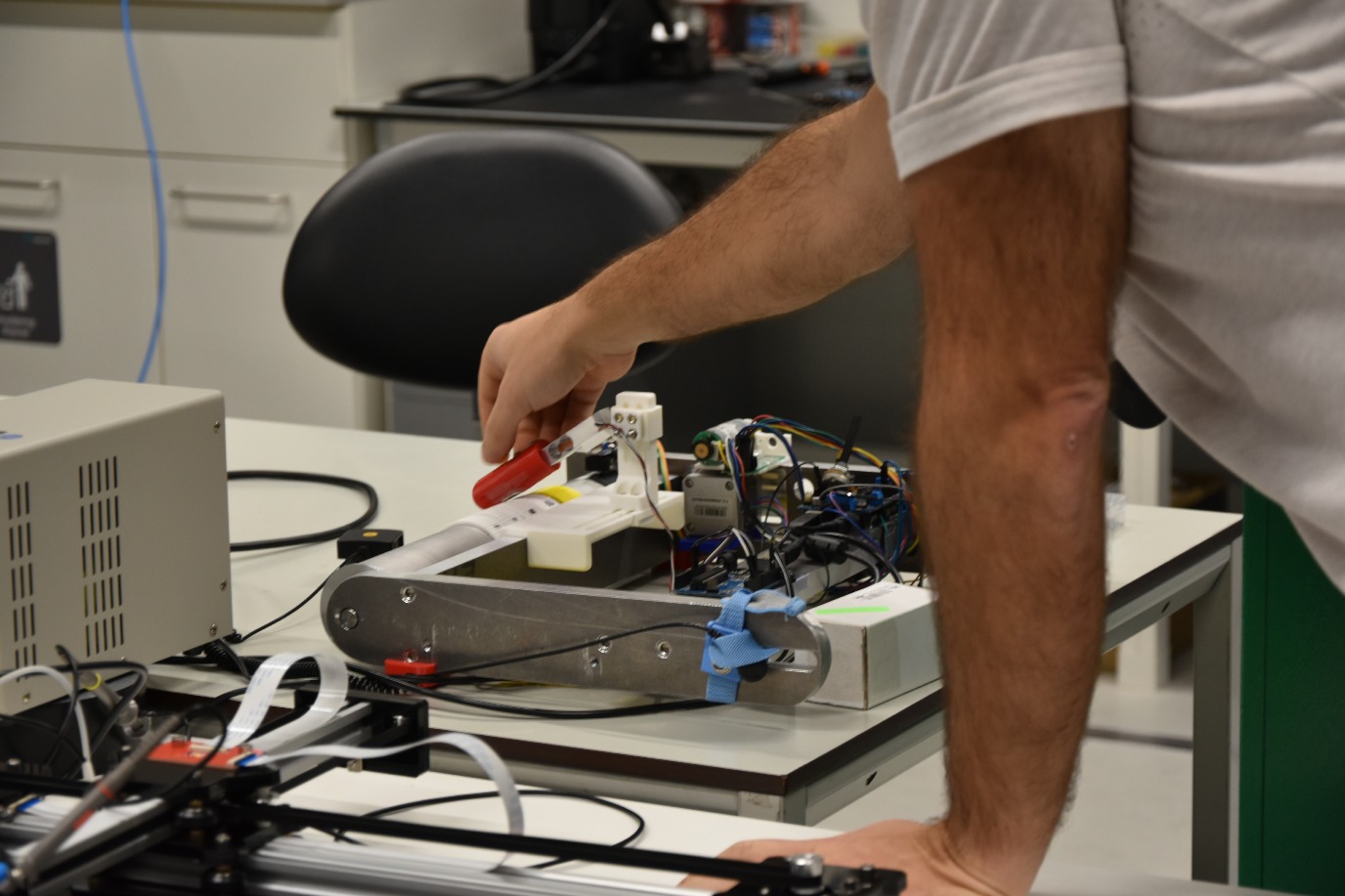

At a recent CogniGron@Work session, CogniGron researcher Alejandro Pequeño Zurro talked about neuromorphic touch: a brain-inspired approach to sensing that’s reshaping how robots experience the world.

Why touch hasn’t been a priority (until now)

Replicating human touch is an enormous challenge. Our hands are incredibly sensitive: each one is packed with around 17,000 mechanoreceptors (tactile sensors). This level of detail allows us to feel pressure, texture, and motion. Building this into a robot is already tough because of physical constraints, but the bigger challenge is what happens next: all those sensors produce a huge amount of data.

In traditional AI systems, tactile sensors typically send signals to a processor every few milliseconds, whether there’s a change or not. Even if the surface being touched hasn’t shifted or moved, the sensor still sends its reading. Multiply that by thousands of sensors, and it’s easy to see how the system becomes overwhelmed: it’s a constant flood of repetitive information, and that makes things inefficient. Processing all of it eats up power, slows down the robot’s response time, and creates a backlog of unnecessary data.
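To put rough numbers on this flood, here is a back-of-the-envelope sketch. It assumes, purely for illustration, one hand's worth of sensors (the ~17,000 mechanoreceptor figure mentioned above), each polled every 2 milliseconds, the interval used as an example later in this article. Real tactile skins vary widely in both sensor count and sampling rate, and the function name is hypothetical.

```python
def readings_per_second(n_sensors: int, period_ms: float) -> float:
    """Readings per second produced by fixed-interval (polled) sensors.

    Every sensor reports once per period, whether or not anything changed.
    """
    samples_per_sensor = 1000.0 / period_ms  # polls per second for one sensor
    return n_sensors * samples_per_sensor


# Illustrative assumption: ~17,000 sensors (one human hand) polled every 2 ms.
rate = readings_per_second(17_000, 2.0)
print(f"{rate:,.0f} readings per second")  # 8,500,000 readings per second
```

That is 8.5 million readings every second from a single hand, and when nothing is moving, almost all of them simply repeat the previous value.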

Thinking like our nervous system

Neuromorphic touch takes a completely different approach. Inspired by how the human body and brain work, this method focuses on what really matters: change.

Alejandro offered a useful comparison: if you touch the palm of your hand, you only want information from your palm, not from your elbow. Neuromorphic sensors follow the same logic. Instead of reporting at fixed intervals, they activate only when a change occurs. This is known as event-based sensing.
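A minimal sketch of event-based sensing, assuming a simple "send-on-delta" scheme applied to sampled readings: each sensor remembers the last value it reported and emits an event only when a new reading differs from it by at least a threshold. The threshold, the (time step, sensor index, polarity) event format and the function name are illustrative assumptions, not the design of any particular neuromorphic sensor; many real event-based devices detect change directly in analogue circuitry rather than on sampled frames.

```python
from typing import List, Sequence, Tuple

# (time step, sensor index, polarity): +1 for a pressure increase, -1 for a decrease
Event = Tuple[int, int, int]


def encode_events(frames: Sequence[Sequence[float]], threshold: float) -> List[Event]:
    """Convert polled sensor frames into sparse change events (send-on-delta)."""
    if not frames:
        return []
    last_sent = list(frames[0])  # the first frame is taken as the baseline
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for i, value in enumerate(frame):
            delta = value - last_sent[i]
            if abs(delta) >= threshold:
                events.append((t, i, 1 if delta > 0 else -1))
                last_sent[i] = value  # update the reference only when an event fires


    return events


# Three sensors over five time steps; only sensor 1 (the "palm") is pressed, at step 2.
frames = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.8, 0.0],
    [0.0, 0.8, 0.0],
    [0.0, 0.8, 0.0],
]
print(encode_events(frames, threshold=0.1))  # [(2, 1, 1)]
```

Fifteen polled readings collapse into a single event, and the untouched sensors (the "elbow") stay silent throughout.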

This method has two key advantages. First, it dramatically reduces the amount of data that needs to be processed. Fewer signals mean lower energy consumption and lower computational demands. Second, it improves responsiveness. Because the system reacts to meaningful input as it arrives, there’s no need to wait for the next scheduled update: the signal gets through the moment something changes.

Say, for instance, that a traditional sensor sends data every two milliseconds. What if something important happens between those updates? The system either notices it late or, if the event is brief enough, misses it entirely, and the moment is lost. Neuromorphic sensors don’t have that problem: they pick up the change as it happens.
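The "between updates" problem can be illustrated with a toy simulation. The sketch assumes a hypothetical 0.5 ms pressure spike (a brief tap or slip) and compares a sensor polled every 2 ms with an idealised change detector, approximating continuous monitoring with a fine 0.1 ms time step. The spike timing, threshold and function names are made up for illustration; time is kept in integer microseconds to avoid floating-point drift.

```python
from typing import Optional


def pressure(t_us: int) -> float:
    """Hypothetical contact pressure: a 0.5 ms spike starting at t = 2.6 ms."""
    return 1.0 if 2_600 <= t_us < 3_100 else 0.0


def polled_detects(period_us: int, duration_us: int, threshold: float) -> bool:
    """Does a fixed-interval sensor ever see the spike in any of its samples?"""
    return any(pressure(t) >= threshold for t in range(0, duration_us, period_us))


def first_change_us(resolution_us: int, duration_us: int, threshold: float) -> Optional[int]:
    """Time of the first change an idealised event sensor would report, or None."""
    previous = pressure(0)
    for t in range(resolution_us, duration_us, resolution_us):
        current = pressure(t)
        if abs(current - previous) >= threshold:
            return t  # report the change immediately
        previous = current
    return None


print(polled_detects(2_000, 10_000, 0.5))  # False: samples at 0, 2, 4, 6, 8 ms miss the spike
print(first_change_us(100, 10_000, 0.5))   # 2600
```

Shortening the polling period would eventually catch the spike, but only by sending even more readings, which is exactly the trade-off event-based sensing avoids.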

The slippery pen problem

Here’s a practical example to bring it all together. Imagine a robot is asked to lift a pen. It grips the pen successfully, but then the pen starts to slip. The sensors detect the slip, but the system doesn’t respond in time, and the pen falls.

What happened? The processor was flooded with sensor data, and the algorithm couldn’t sort through it fast enough to act. In a neuromorphic setup, the change in pressure would have triggered an immediate signal — allowing the robot to adjust its grip in time.
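A toy sketch of such a reflex, under loudly hypothetical assumptions: tactile events arrive one at a time as (time in ms, sensor id, polarity) tuples, a burst of pressure-decrease events within a short window is treated as a slip, and the response is simply to raise the commanded grip force by a fixed step. The window, burst size, force step and function name are all illustrative; real slip detection and grip control are considerably more involved.

```python
from collections import deque
from typing import Deque, Iterable, List, Tuple

Event = Tuple[float, int, int]  # (time in ms, sensor id, polarity: +1 up, -1 down)


def grip_controller(events: Iterable[Event], force: float,
                    window_ms: float = 5.0, burst: int = 3,
                    step: float = 0.5) -> List[Tuple[float, float]]:
    """Handle events in arrival order; tighten the grip when a slip is suspected.

    In this toy model a 'slip' is `burst` or more pressure-decrease events
    within `window_ms`. Returns the (time, new force) of every grip adjustment.
    """
    recent_drops: Deque[float] = deque()
    adjustments: List[Tuple[float, float]] = []
    for t, _sensor, polarity in events:
        if polarity >= 0:
            continue  # only pressure decreases count as slip evidence here
        recent_drops.append(t)
        while recent_drops and t - recent_drops[0] > window_ms:
            recent_drops.popleft()  # forget drops that fall outside the window
        if len(recent_drops) >= burst:
            force += step
            adjustments.append((t, force))
            recent_drops.clear()  # start counting afresh after reacting
    return adjustments


# The pen starts to slip at t = 40 ms: several sensors report falling pressure.
stream = [(10.0, 4, +1), (40.0, 2, -1), (41.0, 3, -1), (42.5, 5, -1), (60.0, 2, +1)]
print(grip_controller(stream, force=2.0))  # [(42.5, 2.5)]
```

Because the controller only ever looks at change events, it reacts 2.5 ms after the first sign of slipping, without sifting through readings from sensors whose pressure never changed.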

A better sense of touch builds better relationships

Neuromorphic touch also has the potential to improve how robots relate to humans. In a collaboration between CogniGron and the University of Southern Denmark, researchers are exploring social robotics — particularly how tactile interactions influence trust.

In a recent pilot study, a robot that could deliver a personalized handshake was rated as more friendly and trustworthy by participants. That kind of natural interaction could be especially important in elderly care or therapy settings, where robots need to feel approachable as well as functional.

Feeling textures, saving lives

And it doesn’t stop there. Neuromorphic touch could also play a critical role in healthcare, particularly in surgical robotics. In an operation, it’s crucial to be able to recognize different textures and levels of resistance. But again, traditional sensor systems produce far too much data to be processed quickly and accurately.

Neuromorphic systems make real-time tactile sensing much more feasible. They bring us closer to surgical robots that can not only cut and hold but also feel what they’re doing. Here at CogniGron, we’re not just making machines smarter. We’re helping them feel the world more like we do. That means more efficient robots, more intuitive human-robot interaction, and, in some cases, technologies that can save lives.