Large-scale computing

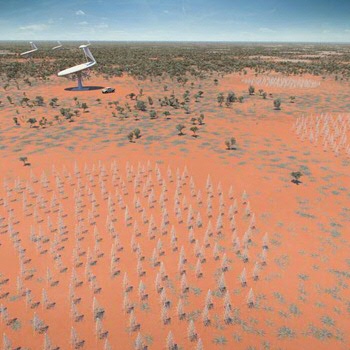

A characteristic of the big data era is that, although computational power has been increasing exponentially (Moore’s law), the same is true for data size, which grows with a much larger rate constant. For example, the Square Kilometre Array (SKA), a radio telescope project sited in Australia and South Africa, and CERN’s Large Hadron Collider are capable of generating several petabytes (PB) of data per day. The calibration of SKA, which involves simultaneous modelling of the sky and the ionosphere, might be one of the largest and most complex optimization problems of the coming 10 years. The target computing power of the Human Brain Project is in the exascale regime. In addition, there are clear signs that Moore’s law is breaking down (Jagadish et al., Big data and its technical challenges, Commun. ACM 57(7), pp. 86–94, 2014).

Techniques such as parallel and high performance computing, GPU computing, multi-core computing, or cloud computing will be of help here. However, for many applications the real bottleneck is not processing time but retrieval time from memory. Parallelization is only effective when the connectivity between parts of the system is kept low, so that the data can be partitioned effectively and memory transfers are minimized. Techniques are required which compute ‘within the database’.

An even more fundamental problem concerns computational complexity, i.e., the fact that the cost of solving some problems scales nonlinearly (sometimes even exponentially) with increasing data size. For example, if an algorithm scales as 2^N, where N is the number of data objects, then doubling compute power is not of much help: it allows only one additional data object to be handled in the same time. In that case the judicious use of approximations and heuristics, or the development of entirely new algorithmic approaches, is the only way forward. This is a particular problem for unstructured data, which tend to be highly connected.
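The remark about exponential scaling can be made concrete with a small calculation. The following is a minimal Python sketch, not taken from any of the projects mentioned above: the helper name `largest_feasible_n` and the budget of 10^12 elementary operations are assumptions chosen purely for illustration. It computes the largest number of data objects N that fits within a fixed operation budget, and shows how little that number moves for a 2^N algorithm when the budget is doubled.

```python
def largest_feasible_n(budget, cost):
    """Largest integer N >= 0 with cost(N) <= budget.

    Assumes cost is an increasing function of N and cost(0) <= budget.
    """
    # Grow an upper bound by doubling until the cost exceeds the budget.
    hi = 1
    while cost(hi) <= budget:
        hi *= 2
    lo = hi // 2  # cost(lo) <= budget, cost(hi) > budget
    # Binary search between the feasible lo and the infeasible hi.
    while lo + 1 < hi:
        mid = (lo + hi) // 2
        if cost(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


# Hypothetical budget: 10**12 elementary operations (illustrative only).
BUDGET = 10**12

scalings = {
    "linear (N)": lambda n: n,
    "quadratic (N^2)": lambda n: n * n,
    "exponential (2^N)": lambda n: 2**n,
}

for name, cost in scalings.items():
    before = largest_feasible_n(BUDGET, cost)
    after = largest_feasible_n(2 * BUDGET, cost)
    print(f"{name}: N = {before} -> {after} after doubling compute power")
```

Under these assumptions, doubling the budget doubles the feasible N for the linear algorithm, raises it by a factor of about 1.41 for the quadratic one, and raises it only from 39 to 40 for the exponential one, since 2^(N+1) = 2 · 2^N.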

Key questions:

- Can we develop new algorithmic approaches to tackle the growing mismatch between the rate at which data volumes increase and the rate at which computational power increases?
- How can we design optimal data partitioning for highly connected systems?
- Can we engineer fast and reliable data transfer mechanisms for big data?
- How can we parallelize software for multi-core computing so as to optimize platform usage in software engineering?
- Can we design real-time techniques for pruning and compressing raw data volumes on the fly?
- How can we reduce the computational complexity of big data computations as the number of components grows?