Artificial intelligence in healthcare

The rise of artificial intelligence (AI) offers new opportunities for healthcare. From analysing medical scans to predicting disease progression, AI can support doctors in making complex decisions. But how reliable are these models? And how can we ensure that AI enriches medical practice without losing the human factor?

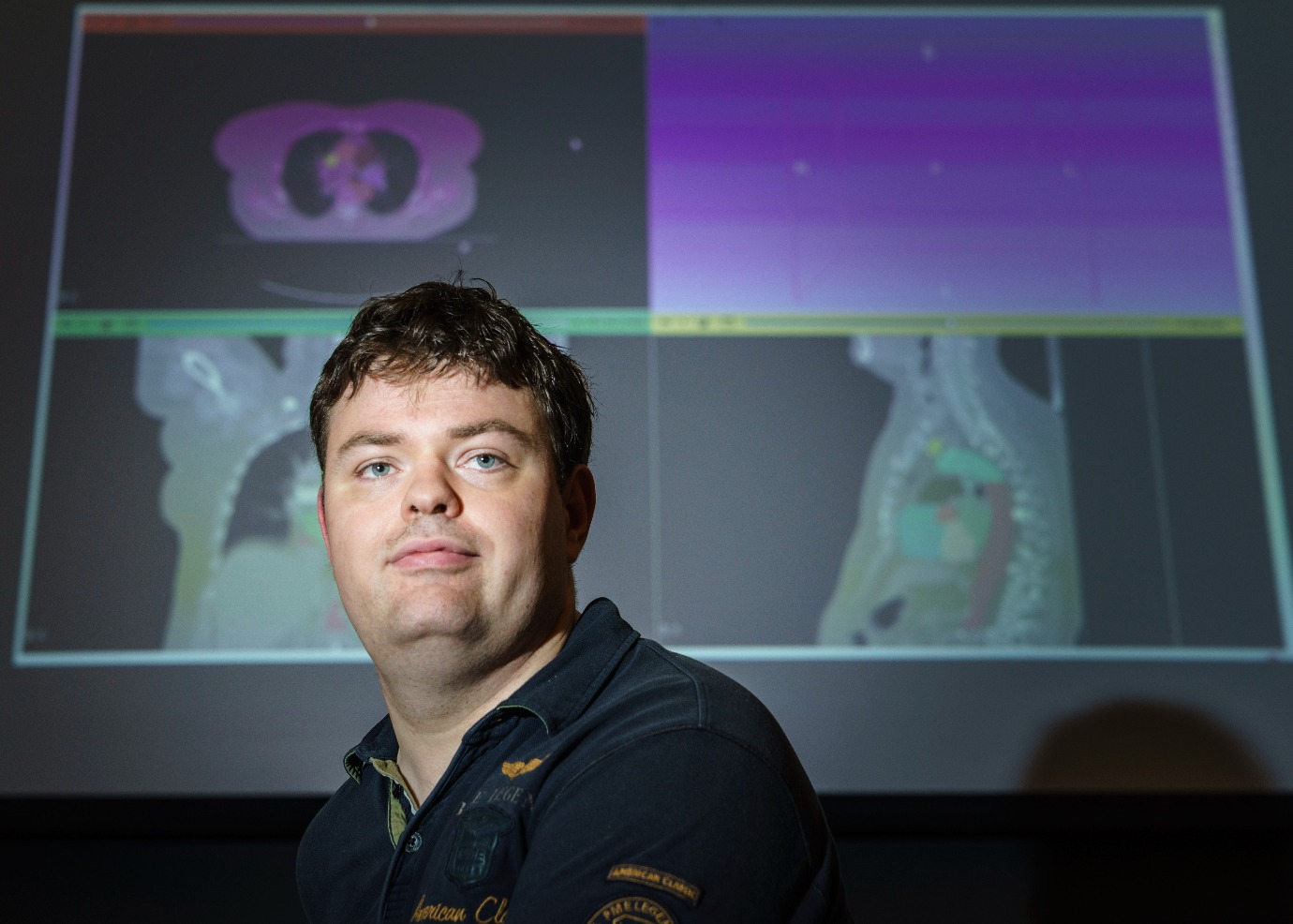

These are the questions that Steff Groefsema, PhD researcher at the Bernoulli Institute and the University Medical Centre Groningen (UMCG), is working on. Together with Dr Matias Valdenegro Toro, Professor Peter van Ooijen and Dr Engr Charlotte Brouwer, he is investigating how AI can be used ethically in healthcare as part of the project ‘Ethical uncertainty in medical AI’. This research focuses not only on the technical performance of AI, but above all on the uncertainties within the models and their impact on medical decision-making.

What makes the project special is that Steff is not only a researcher, but also a co-applicant and co-creator of this project. His involvement from the very beginning means that he builds a unique bridge between the technological development of AI and the needs of medical practice.

AI as an assistant, not a replacement

The role of AI in healthcare is growing rapidly, but how do we ensure that this technology supports doctors without undermining human judgement? According to Steff, the power of AI lies primarily in collaboration. ‘AI can recognise patterns in medical scans and support doctors, but it does not take over the full judgement of a human being,’ he explains. ‘For example, a model may work well for a certain group of patients, but that does not automatically mean that it is also reliable for others. Think of differences between men and women, or between patients from different ethnic backgrounds.’

It is precisely this uncertainty within AI models that makes their application in medical practice challenging. ‘A doctor must be able to rely completely on a diagnosis,’ says Steff. ‘But when an AI model makes an assessment, there is always a degree of uncertainty. Our research focuses on how we can make this uncertainty transparent, so that doctors do not see AI as an inscrutable “black box”, but as a tool that supports them in making better decisions.’

Uncertainty in AI: How big is the risk?

The core of Steff's research revolves around mapping margins of uncertainty within AI models. How reliable are the predictions? How do you ensure that an AI model indicates when it doesn’t fully know the answer? And how can doctors judge when they can trust AI and when extra vigilance is needed?

‘Take cancer treatments, for example,’ explains Steff. ‘An AI model can detect a tumour as well as the organs that may be at risk from radiation. But if that prediction is not 100% certain, as a doctor you want to know where the uncertainty lies. Our goal is to visualise this uncertainty, for example by using colour gradations on medical scans. This way, a radiotherapist knows exactly which areas he or she needs to check extra carefully.’
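As a rough illustration of what a per-pixel uncertainty overlay could be built from, the sketch below computes the entropy of the averaged predictions of several models. This is an assumption for illustration only: the article does not say which uncertainty measure the project uses, and the function name `uncertainty_map` and the tiny example array are hypothetical.

```python
import numpy as np


def uncertainty_map(prob_maps: np.ndarray) -> np.ndarray:
    """Per-pixel binary entropy (in bits) of the mean foreground probability.

    prob_maps has shape (n_models, height, width). Each entry is one model's
    predicted probability that the pixel belongs to the structure of interest.
    The result lies in [0, 1]: 0 means the models are confident, 1 means the
    averaged prediction is a coin flip.
    """
    mean_prob = prob_maps.mean(axis=0)
    # Clip to avoid log(0) for fully confident pixels.
    p = np.clip(mean_prob, 1e-12, 1 - 1e-12)
    return -(p * np.log2(p) + (1 - p) * np.log2(1 - p))


# Hypothetical 2x2 "scan" scored by three models (made-up numbers).
probs = np.array([
    [[0.99, 0.50], [0.01, 0.90]],
    [[0.98, 0.40], [0.02, 0.60]],
    [[0.99, 0.60], [0.01, 0.30]],
])
heat = uncertainty_map(probs)
```

The resulting array could then be drawn as a semi-transparent colour layer on top of the scan, so that high values stand out as the areas to check more carefully.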

Recent research shows that AI does not always work equally well for everyone. ‘There are AI models that perform better on men than on women, simply because more data is available from male patients,’ says Steff. ‘In skin cancer diagnoses, we also see that AI is less accurate in people with darker skin tones. These are worrying developments that we need to take into account.’
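One simple way to make such differences visible is to report a model's accuracy per patient group rather than as a single overall figure. The sketch below is a minimal illustration with made-up labels, not data or code from the study; the function name `accuracy_by_group` and the group labels "A" and "B" are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return the fraction of correct predictions for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Made-up labels purely for illustration; not data from the study.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

scores = accuracy_by_group(y_true, y_pred, groups)
gap = max(scores.values()) - min(scores.values())
```

Here the overall accuracy of 75% hides the fact that the model is right every time for group A and only half the time for group B. The group label could just as well be sex, skin tone or the hospital where a patient was treated.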

Collaboration between technology and medical experts

The ‘Ethical uncertainty in medical AI’ project is interdisciplinary and brings together AI specialists and medical professionals. Steff works closely with clinical physicists and radiotherapists, as well as experts such as Fokie Cnossen, who specialises in human-machine interaction.

‘The input of doctors is crucial,’ Steff emphasises. ‘They know what is really important in practice. Our research is therefore not only theoretical; we test the models together with medical experts to see how they actually contribute to better care.’

This is done in collaboration with the Netherlands Cancer Institute, among others, where the team is investigating how AI models behave in different patient groups. ‘If a model has been trained on patients in Groningen, will it also work well on patients in Amsterdam? Or is there a difference? These are questions we are trying to answer.’

Future prospects: How far can we go?

Although AI already plays a role in healthcare, Steff believes full automation remains a distant prospect. ‘The idea that a patient walks into the hospital, has a scan done, and AI immediately makes a diagnosis without human intervention? We are still a long way from that. AI works best as a support, not as a replacement for the doctor.’

What is possible, however, is simplifying repetitive tasks. ‘For example, a radiotherapist now receives a CT scan in which the AI has already marked organs and possible tumours. This saves time and helps the doctor to work faster and more accurately. That's where AI really adds value.’

Man and machine: The ultimate collaboration

The research conducted by Steff Groefsema and his team shows that AI offers enormous possibilities, but that a critical eye remains essential. By highlighting uncertainties within AI and involving medical experts in its development, they are working towards a future in which AI and humans reinforce each other.

‘AI will never completely take over healthcare, and that's a good thing,’ Steff concludes. ‘The power of the technology lies in collaboration with the expertise of doctors. Only then can we truly improve healthcare.’

This article is based on an interview with Steff Groefsema and the study ‘Ethical uncertainty in medical AI’, conducted by the Bernoulli Institute and the UMCG.

This project is funded by the Ubbo Emmius Fund and is embedded in the Jantina Tammes School.

Text: Djoeke Bakker

Image: Reyer Boxem
